ScaleStore:A Fast and Cost-Efficient Storage Engine using DRAM, NVMe, and RDMA.pdf

免费下载

ScaleStore: A Fast and Cost-Eicient Storage Engine

using DRAM, NVMe, and RDMA

Tobias Ziegler

Technische Universität

Darmstadt

Carsten Binnig

Technische Universität

Darmstadt

Viktor Leis

Friedrich-Alexander-Universität

Erlangen-Nürnberg

ABSTRACT

In this paper, we propose ScaleStore, a novel distributed storage

engine that exploits DRAM caching, NVMe storage, and RDMA

networking to achieve high performance, cost-eciency, and scala-

bility at the same time. Using low latency RDMA messages, Scale-

Store implements a transparent memory abstraction that provides

access to the aggregated DRAM memory and NVMe storage of all

nodes. In contrast to existing distributed RDMA designs such as

NAM-DB or FaRM, ScaleStore stores cold data on NVMe SSDs

(ash), lowering the overall hardware cost signicantly. The core

of ScaleStore is a distributed caching strategy that dynamically

decides which data to keep in memory (and which on SSDs) based

on the workload. The caching protocol also provides strong consis-

tency in the presence of concurrent data modications. Our evalua-

tion shows that ScaleStore achieves high performance for various

types of workloads (read/write-dominated, uniform/skewed) even

when the data size is larger than the aggregated memory of all

nodes. We further show that ScaleStore can eciently handle

dynamic workload changes and supports elasticity.

CCS CONCEPTS

• Information systems

→

Parallel and distributed DBMSs;

DBMS engine architectures.

KEYWORDS

Distributed Storage Engine, Transaction Processing, Flash, RDMA

ACM Reference Format:

Tobias Ziegler, Carsten Binnig, and Viktor Leis. 2022. ScaleStore: A Fast

and Cost-Ecient Storage Engine using DRAM, NVMe, and RDMA. In

Proceedings of the 2022 International Conference on Management of Data

(SIGMOD ’22), June 12–17, 2022, Philadelphia, PA, USA. ACM, New York, NY,

USA, 15 pages. https://doi.org/10.1145/3514221.3526187

1 INTRODUCTION

In-memory DBMSs. Decades of decreasing main memory prices

have led to the era of in-memory DBMSs. This is reected by

the vast number of academic projects such as MonetDB [

8

], H-

Store [

34

], and HyPer [

37

] as well as commercially-available in-

memory DBMSs such as SAP HANA [

24

], Oracle Exalytics [

26

],

Permission to make digital or hard copies of all or part of this work for personal or

classroom use is granted without fee provided that copies are not made or distributed

for prot or commercial advantage and that copies bear this notice and the full citation

on the rst page. Copyrights for components of this work owned by others than the

author(s) must be honored. Abstracting with credit is permitted. To copy otherwise, or

republish, to post on servers or to redistribute to lists, requires prior specic permission

and/or a fee. Request permissions from permissions@acm.org.

SIGMOD ’22, June 12–17, 2022, Philadelphia, PA, USA

© 2022 Copyright held by the owner/author(s). Publication rights licensed to ACM.

ACM ISBN 978-1-4503-9249-5/22/06.. .$15.00

https://doi.org/10.1145/3514221.3526187

Table 1: Hardware landscape in terms of cost, latency, BW.

Price

[$/TB]

Read Latency

[𝜇s/4 KB]

Bandwidth

[GB/s]

DRAM 5000 0.1 92.0

Flash SSDs 200 78.0 12.5

RDMA (IB EDR 4x) - 5.0 11.2

and Microsoft Hekaton [

19

]. However, while in-memory DBMSs

are certainly ecient, they also suer from signicant downsides.

Downsides of in-memory DBMSs. An inherent issue of in-

memory DBMSs is that all data must be memory resident. In turn,

this means if data sets grow, larger memory capacities are required.

Unfortunately, DRAM module prices do not increase linearly with

the capacity, for instance, a 64 GB DRAM module is 7 times more

expensive than a 16 GB module [

1

]. Therefore, scaling data beyond

a certain size results in an “explosion” of the hardware cost. More

importantly, since 2012 main memory prices have started to stag-

nate [

27

] – while data set sizes are constantly growing. This is why

research proposed two directions to handle very large data sets.

NVMe storage engines. As a rst direction, a new class of

storage engines [

38

,

51

] has been presented that can leverage NVMe

SSDs (ash) to store (cold) data. As Table 1 (second row) shows,

the price per terabyte of SSD storage is about 25 times cheaper

than the price of main memory. The key idea behind such high

performance storage engines is to redesign buer managers to

cause only minimal overhead on modern hardware in case pages

are cached in memory. This is in stark contrast to a classical buer

manager that suers from high overhead even if data is cache

resident [

28

]. Recent papers [

38

,

51

] have shown that when the

entire working set (aka hot set) ts into memory, the performance

of such storage engines is comparable to pure in-memory DBMSs.

Unfortunately, when the working set is considerably larger than the

memory capacities, the system performance signicantly degrades.

This is because the latency of SSDs is still at least two orders of

magnitude higher than DRAM (see Table 1 second row). This latency

cli mainly aects latency-critical workloads such as OLTP.

In-memory scale-out systems. A second (alternative) direc-

tion to accommodate large data sets is to use scale-out (distributed)

in-memory DBMS designs on top of fast RDMA-capable networks [

7

,

20

,

33

,

43

,

53

]. The main intuition is to scale in-memory DBMSs

beyond the capacities of a single machine by leveraging the aggre-

gated memory capacity of multiple machines. This avoids the cost

explosion that typically arises in scale-up designs. The main obser-

vation is that scale-out systems execute latency-critical transactions

eciently via RDMA. In fact, as shown in Table 1 (third row), the

latency of remote memory access using a recent InniBand net-

work (EDR 4

×

) is one order of magnitude lower than NVMe latency.

As a result, systems such as FaRM [

20

,

21

,

56

] and NAM-DB [

67

]

Session 10: Distributed and Parallel Databases

SIGMOD ’22, June 12–17, 2022, Philadelphia, PA, USA

685

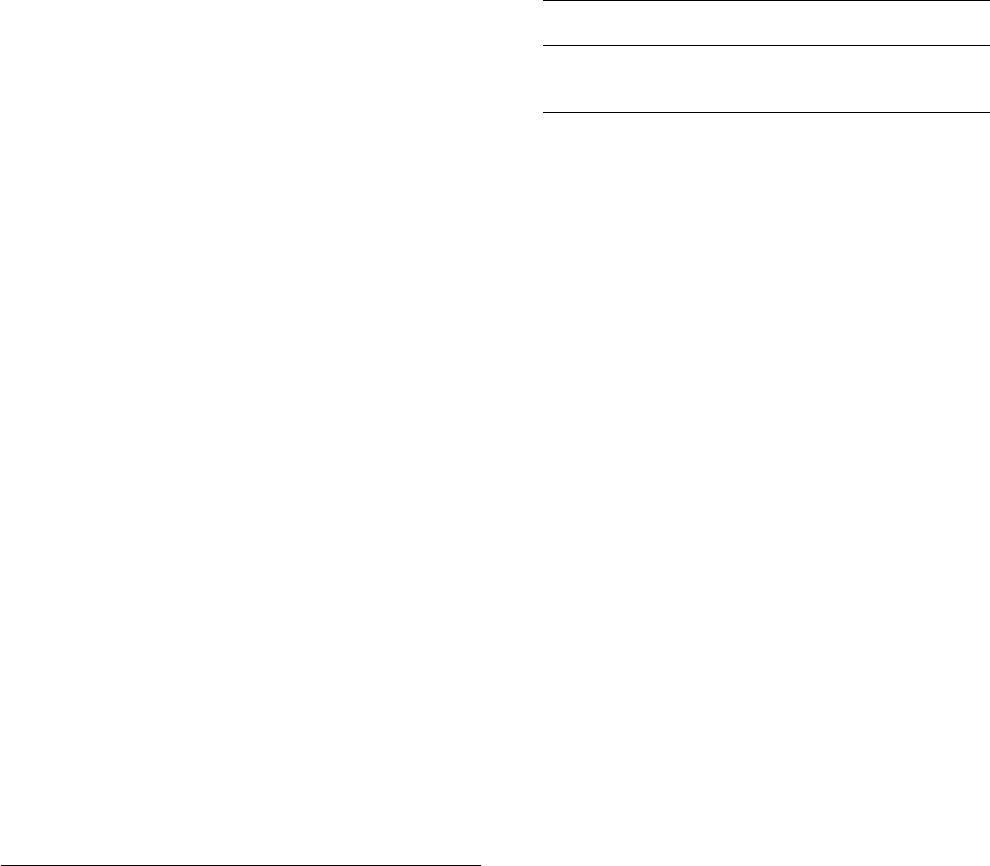

Placement

P3 P4 P5

P1

P2

Node 0 Node 1 Node 2

P4

P2

P5

Memory

SSD

P3

P1

II) intial placement

Node 0 Node 1 Node 2

P4

P2

P5

P3

P1

P4

P1

I) logical B-Tree

III) workload aware placement/ caching

(a) Logical B-Tree and placement

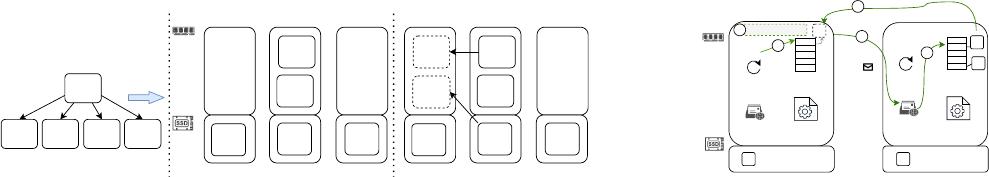

Protocol

message

P1

Memory

SSD

Node 0

P1

-

-

-

P1

Worker

Pool

Translation

Table

Message

Handler

Page

Provider

B-Tree lookup

Node 1

-

P1

-

-

P2

P2

Worker

Pool

Translation

Table

Message

Handler

Page

Provider

Transfer page

Page in

cache?

Find Page

1

2

3

4

5

... ...

P3 P4

P1

(b) ScaleStore’s Main Components

Figure 1: Overview of ScaleStore using a distributed B-Tree. (a) II The B-tree pages can be spread across lo cal/remote memor y

and SSDs. III The caching protocol optimizes how pages are laid out across machines. (b) ScaleStore’s main components.

provide high performance even for latency-sensitive OLTP work-

loads. However, such distributed in-memory DBMS designs still

require that all data must reside in the collective main memory of

the cluster. This causes unnecessarily high hardware costs when

the hot set is smaller than the complete data set.

ScaleStore. Given the two directions — single-node storage

engines and in-memory scale-out systems — the question remains if

one can combine the best of both worlds. In this work, we propose a

novel distributed storage engine called ScaleStore (code available

at [

15

]) that provides low-latency access to the aggregated memory

of all nodes via RDMA while seamlessly integrating SSDs to store

cold data. In contrast to single-node storage engines, this allows

ScaleStore to accommodate the hot set in the aggregated memory

to avoid the latency gap. However, unlike distributed in-memory

systems, ScaleStore evicts cold data to cost-ecient storage.

Challenges. While combining distributed memory with SSDs

appears intuitive, it triggers several non-trivial design questions: (1)

Deciding on the optimal data placement in ScaleStore is challeng-

ing due to the various storage locations (i.e., local memory, SSDs,

remote memory, and remote SSDs). Clearly, a naïve static allocation

scheme could be used in which the storage locations are determined

upfront (in an oine manner) to support a given workload. How-

ever, this prevents ecient support for shifting workloads where

the hot set is changing over time [

35

] or for elasticity which is a key

requirement for modern scalable DBMSs, especially in the cloud. (2)

Another challenge is to synchronize and coordinate data accesses.

Since data in ScaleStore is distributed across storage devices and

nodes it is non-trivial to achieve consistency eciently.

Distributed cache protocol. To address these challenges, as a

rst core contribution, ScaleStore implements a novel distributed

caching protocol based on RDMA that operates on xed-size pages.

Our distributed caching protocol provides transparent page access

across machines and storage devices. As such, worker threads can

access all pages as in a non-distributed system. Furthermore, an im-

portant aspect is that the distributed caching protocol dynamically

handles shifts in the workload. For example, if the access pattern

(i.e., which node requires which page) changes, the in-memory

cache is dynamically repopulated. This means that pages are mi-

grated from the cache of one node to another node. To enable high

performance for workloads with high access locality ScaleStore

dynamically caches frequently-accessed pages in DRAM on mul-

tiple nodes simultaneously. Finally, a last important aspect is that

the distributed caching protocol coordinates page accesses across a

cluster of nodes. This ensures a consistent view of the data despite

concurrent modications even when multiple copies are cached by

several nodes.

High performance eviction. Because ScaleStore caches pages

and handles workload shifts, the local DRAM buer may ll up at

very high rates, and thus unused (cold) pages have to be evicted

eciently. Existing strategies such as LRU or Second Chance are

either too slow or not accurate enough. ScaleStore, therefore,

employs a novel distributed high performance replacement strat-

egy to identify cold pages and evict them eciently. Importantly,

our eviction strategy is generally applicable to arbitrary data struc-

tures, rather than being hard-coded to any particular data structure,

which makes ScaleStore a general-purpose storage engine.

Easy-to-use programming abstraction. Despite being a com-

plex system, ScaleStore oers a programming model that allows

developers to implement distributed data structures in a simple

manner. Typically, creating distributed data structures such as dis-

tributed B-trees is a very complex and tedious task. For instance,

it is not uncommon for specialized RDMA data structures to have

thousands of lines of code [

73

], even with hard-coded caching rules

and no SSD support. The programming model of ScaleStore, in

contrast, hides all this complexity and makes distributed data struc-

ture design as easy as local data structure design.

2 SYSTEM OVERVIEW

In this section, we illustrate the main concept behind our system

using a motivating example and introduce the main components.

2.1 A Motivating Example

In ScaleStore, page access is transparent: any node can access

any page using its page identier (PID) and the system takes care

of page placement. Consider the B-tree as shown in Figure 1(a)

I, which consists of a root page (P1) and four leaf pages (P2-P5).

Figure 1(a) II illustrates how the pages might initially be distributed

across a cluster of three nodes: Pages P1 and P2 are cached by Node

1, while pages P3, P4, and P5 are not cached and only reside on the

SSDs. P1 and P2 also have a copy on SSDs, but for brevity these

pages are not shown. Note that this placement is only a snapshot,

and during operation, ScaleStore dynamically re-adjusts which

pages are cached based on the workload. For example, as Figure 1(a)

III shows, if Node 0 performs a lookup that involves pages P1 and

P4, it will replicate P1 from the remote main memory of Node

1 and P4 from the remote SSD of Node 1. Note that Node 1 did

not automatically cache P4. At this point, P1 will be cached by

Session 10: Distributed and Parallel Databases

SIGMOD ’22, June 12–17, 2022, Philadelphia, PA, USA

686

of 15

免费下载

【版权声明】本文为墨天轮用户原创内容,转载时必须标注文档的来源(墨天轮),文档链接,文档作者等基本信息,否则作者和墨天轮有权追究责任。如果您发现墨天轮中有涉嫌抄袭或者侵权的内容,欢迎发送邮件至:contact@modb.pro进行举报,并提供相关证据,一经查实,墨天轮将立刻删除相关内容。

下载排行榜

评论