SIGMOD2022_CompressDB:Enabling Efficient Compressed Data Direct Processing for Various Databases.pdf

免费下载

CompressDB: Enabling Eicient Compressed Data Direct

Processing for Various Databases

Feng Zhang

⋄

, Weitao Wan

⋄

, Chenyang Zhang

⋄

, Jidong Zhai

★

, Yunpeng Chai

⋄

, Haixiang Li

+

,

Xiaoyong Du

⋄

⋄

Key Laboratory of Data Engineering and Knowledge Engineering (MOE), and School of Information,

Renmin University of China

★

Department of Computer Science and Technology, Tsinghua University

+

Tencent Inc., China

fengzhang@ruc.edu.cn,wanweitao@ruc.edu.cn,chenyangzhang@ruc.edu.cn,zhaijidong@tsinghua.edu.cn

ypchai@ruc.edu.cn,blueseali@tencent.com,duyong@ruc.edu.cn

ABSTRACT

In modern data management systems, directly performing opera-

tions on compressed data has been proven to be a big success facing

big data problems. These systems have demonstrated signicant

compression benets and performance improvement for data ana-

lytics applications. However, current systems only focus on data

queries, while a complete big data system must support both data

query and data manipulation.

We develop a new storage engine, called CompressDB, which

can support data processing for databases without decompression.

CompressDB has the following advantages. First, CompressDB uti-

lizes context-free grammar to compress data, and supports both

data query and data manipulation. Second, for adaptability, we inte-

grate CompressDB to le systems so that a wide range of databases

can directly use CompressDB without any change. Third, we enable

operation pushdown to storage so that we can perform data query

and manipulation in storage systems without bringing large data

to memory for high eciency.

We validate the ecacy of CompressDB supporting various kinds

of database systems, including SQLite, LevelDB, MongoDB, and

ClickHouse. We evaluate our method using six real-world datasets

with various lengths, structures, and content in both single node

and cluster environments. Experiments show that CompressDB

achieves 40% throughput improvement and 44% latency reduction,

along with 1.81 compression ratio on average.

CCS CONCEPTS

• Information systems

→

Data compression; Database man-

agement system engines; • Theory of computation

→

Data

compression.

KEYWORDS

compression, compressed data direct processing, database systems

Permission to make digital or hard copies of all or part of this work for personal or

classroom use is granted without fee provided that copies are not made or distributed

for prot or commercial advantage and that copies bear this notice and the full citation

on the rst page. Copyrights for components of this work owned by others than ACM

must be honored. Abstracting with credit is permitted. To copy otherwise, or republish,

to post on servers or to redistribute to lists, requires prior specic permission and/or a

fee. Request permissions from permissions@acm.org.

SIGMOD ’22, June 12–17, 2022, Philadelphia, PA, USA

© 2022 Association for Computing Machinery.

ACM ISBN 978-1-4503-9249-5/22/06.. . $15.00

https://doi.org/10.1145/3514221.3526130

ACM Reference Format:

Feng Zhang

⋄

, Weitao Wan

⋄

, Chenyang Zhang

⋄

, Jidong Zhai

★

, Yunpeng

Chai

⋄

, Haixiang Li

+

, Xiaoyong Du

⋄

. 2022. CompressDB: Enabling Ecient

Compressed Data Direct Processing for Various Databases. In Proceedings

of the 2022 International Conference on Management of Data (SIGMOD ’22),

June 12–17, 2022, Philadelphia, PA, USA. ACM, New York, NY, USA, 15 pages.

https://doi.org/10.1145/3514221.3526130

1 INTRODUCTION

Modern big data systems are facing an exponentially growing data

volume and use data compression to reduce the storage footprint. To

avoid the overhead of constant compression and decompression op-

erations, existing research systems have explored the idea of directly

performing big data operations on compressed data [

13

,

72

,

98

–

103

,

105

]. These systems have demonstrated signicant compres-

sion ratio and performance improvement at the same time for data

analytics applications.

While existing solutions have shown great potential on read-only

query processing, a feature-complete big data system must support

both data query and data manipulation. In particular, a system must

support updates of random records as well as insertion and deletion

of records. Previous solutions, however, do not natively support

these functionalities, and thus must decompress and re-compress a

relatively large chunk of data each time modication is incurred,

leading to signicant performance overhead.

In this paper, we strive to ll in the missing piece by developing

a highly ecient technique to support updates, inserts, and deletes

directly on compressed data, thus enabling a space-ecient big

data system that supports both data query and manipulation. This

is a challenging task because existing compression technologies are

mostly optimized for compression ratio or read operations; the data

structures used for compressed data are not amenable to modica-

tions. For example, Succinct [

13

] is a database supporting queries

over compressed data; the compression technique is based on index

and sux array [

13

,

21

,

26

,

30

,

43

,

68

] where compressed elements

are dependent on each other, making it extremely inecient if a

small unit of data needs updates. Another example is TADOC (text

analytics directly on compression) [101, 102, 105], which achieves

a similar goal as Succinct but uses a rule-based compression strat-

egy. TADOC uses a rule to represent content that appears multiple

times.

We develop a new storage engine, called CompressDB, which

can support both data query and data manipulation directly on

Session 23: Storage and Indexing

SIGMOD ’22, June 12–17, 2022, Philadelphia, PA, USA

1655

compressed data and can support various database systems. We

make a key observation that rule-based compression method is

suitable for data manipulation if the depth

1

of the DAG of rules is

limited to a shallow degree. Specically, CompressDB adopts rule-

based compression and limits its rule generation depth. Meanwhile,

CompressDB can compress and manipulate data in real time by

operating grammatical rules. Compared with the previous rule-

based compression targeting data analytics [

105

], we develop a

series of novel designs: In element level, we propose a new data

structure of data holes within rules. In rule level, we enable ecient

rule positioning and rule split for random update. In DAG level, we

reduce the depth of rule organization for eciency. By leveraging

new data structures and algorithm designs, CompressDB is highly

ecient in data manipulation without decompression, which has

not been supported by previous compression-based systems.

To enable CompressDB to seamlessly support various databases,

we develop CompressDB in le systems. At the le system layer,

CompressDB can handle system calls like read and write, as they can

be reimplemented with operations like extract, replace, append, etc.

Accordingly, CompressDB can support dierent types of database

systems that run on the le systems (e.g., SQLite, MySQL, MongoDB,

etc). These database systems rely on the system calls handled by

CompressDB. Thus, various data types (e.g., integer, oat, string,

etc.) and operations (e.g., join, select, insert, etc.) of database systems

can be supported by CompressDB. In addition, we develop more

general operations for CompressDB that are not supported by the

le system, such as insert and delete. Because these operations do

not have corresponding POSIX interface, we also provide a separate

set of APIs, which can be used eciently.

We validate the ecacy of CompressDB by supporting various

kinds of database systems, including SQLite [

10

], LevelDB [

37

],

MongoDB [

8

], and ClickHouse [

5

]. We evaluate our method using

six real-world datasets with various lengths, structures, and content

in both single node and cluster environments. We use a ve-node

cluster in the cloud with MooseFS [

9

], a high-performance network

distributed le system. MooseFS spreads data on cloud and provides

high-throughput accesses to data. Compared to the original baseline

of MooseFS, our method achieves 40% throughput improvement,

44% latency reduction, and 1.81 compression ratio on average, which

proves the eectiveness and eciency of our method. The paper

makes the following key contributions:

•

We develop ecient data manipulation operations, such as

insert, delete, and update, directly on compressed data. Along

with previous random access support, we enable both data

query and data manipulation.

•

We develop CompressDB, a storage engine that is integrated

into le systems. CompressDB can support various database

systems seamlessly without modifying the databases.

•

We enable operation pushdown to storage systems, which

avoids unnecessary data movement between memory and

disks, thus improving processing eciency on compressed

data.

1

We dene the depth of a DAG as the maximal length of a directed path from the root

to leaves.

2 PRELIMINARY

After a quick scan at these algorithms, we nd that grammar-based

compression [

64

,

65

,

85

] is naturally suitable for random update

directly on compressed data. We use TADOC [

101

], a representative

rule based compression, for illustration.

2.1 Rule-Based Compression

TADOC is a novel rule-based solution for compression-based direct

processing [

101

–

105

], which can be explained from three levels:

elements, rules, and DAG.

•

Element: The smallest indivisible minimum processing unit. An

element can be either a rule or a data unit such as a word from

the original le.

•

Rule: String of elements. TADOC uses a rule to represent re-

peated content, and a rule consists of subrules and data units.

•

DAG: The rule-compressed representation. The relations between

dierent rules can be organized as a directed acyclic graph

(DAG).

Such a rule-based representation is much smaller than the origi-

nal data. With this method, TADOC recursively represents pieces

of input data into a hierarchically compressed form, which is of

great benets for analytics over compressed data, since a rule can

always be restored freely by explaining its elements, regardless of

the context in which the rule appears.

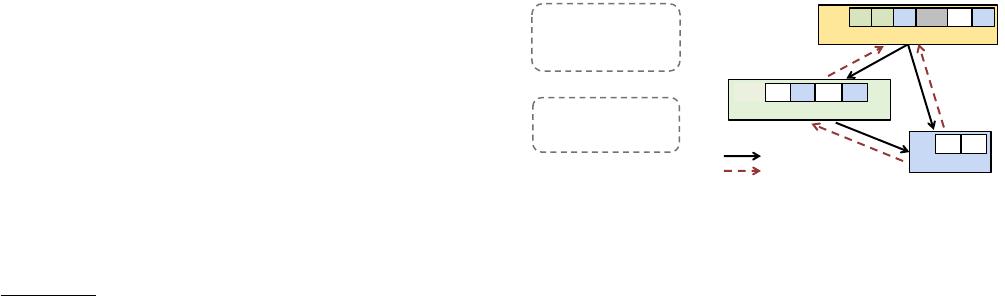

Rule compression. Rule expression in TADOC is a kind of

compression, because each rule represents a repeated data segment

in the input. In Figure 1 (a), we show the original input, which

has two les. The symbol

𝑤

𝑖

can represent a minimal data unit,

which can be a word, a character, or a data segment. TADOC can

transform the input to a set of rules, as shown in Figure 1 (b). In

Figure 1 (b),

𝑅𝑖

represents a rule.

𝑅

0 does not have in-edges, so

we also call

𝑅

0 the root. To utilize the redundancy between les,

TADOC can compress dierent les together with a le boundary

𝑠𝑝𝑡

𝑖

inserted in

𝑅

0. For example,

𝑠𝑝𝑡

1 in

𝑅

0 is used to indicate

that the rst le is represented as “

𝑅

1

, 𝑅

1

, 𝑅

2” while the second le

“

𝑤

2

, 𝑅

2”. Such a representation is lossless compression. To restore

data, we can recursively restore the rules from

𝑅

0. For example, to

restore

𝑓 𝑖𝑙𝑒𝐴

, we need to restore

𝑅

1 with

𝑅

2 before restoring

𝑅

0,

which is “

𝑤

1

, 𝑤

2

, 𝑤

3

, 𝑤

4

, 𝑤

2

, 𝑤

3”. The relations between the rules

can be represented as a DAG, as shown in Figure 1 (c).

R0: R1 R1 R2w2

R1:

R2: w2 w3

<w1,2>, <w2,7>, <w3,6>, <w4,2>

Rule Relation

Information Propagation

spt1

Step 1

Step 2

Step 3

<w2,1>, <w3,1>

<w1,1>, <w2,2>, <w3,2>, <w4,1>

<w2,1>, <w3,1>

<w1,1>, <w2,2>,

<w3,2>, <w4,1>

R2w1 R2w4

R2

file A: w1 w2 w3 w4 w2 w3

w1 w2 w3 w4 w2 w3

w2 w3

file B: w2 w2 w3

(a) Original input.

(b) Rule representation.

R0 : R1 R1 R2 spt1 w2 R2

R1 : w1 R2 w4 R2

R2 : w2 w3

(c) DAG organization.

Figure 1: An example of TADOC compression.

Data analytics. Based on the rule representation, we can per-

form common data analytics directly on the rule compressed data [

103

].

In detail, TADOC can convert the analytics task to a DAG traversal

problem with rule interpretation. We use word count as an example

Session 23: Storage and Indexing

SIGMOD ’22, June 12–17, 2022, Philadelphia, PA, USA

1656

of 15

免费下载

【版权声明】本文为墨天轮用户原创内容,转载时必须标注文档的来源(墨天轮),文档链接,文档作者等基本信息,否则作者和墨天轮有权追究责任。如果您发现墨天轮中有涉嫌抄袭或者侵权的内容,欢迎发送邮件至:contact@modb.pro进行举报,并提供相关证据,一经查实,墨天轮将立刻删除相关内容。

下载排行榜

评论