PolarDB Serverless:A Cloud Native Database for Disaggregated Data Centers - Wei Cao.pdf

免费下载

PolarDB Ser verless: A Cloud Native Database for Disaggregated

Data Centers

Wei Cao

†,‡

, Yingqiang Zhang

‡

, Xinjun Yang

‡

, Feifei Li

‡

, Sheng Wang

‡

, Qingda Hu

‡

Xuntao Cheng

‡

, Zongzhi Chen

‡

, Zhenjun Liu

‡

, Jing Fang

‡

, Bo Wang

‡

, Yuhui Wang

‡

Haiqing Sun

‡

, Ze Yang

‡

, Zhushi Cheng

‡

, Sen Chen

‡

, Jian Wu

‡

, Wei Hu

‡

, Jianwei Zhao

‡

Yusong Gao

‡

, Songlu Cai

‡

, Yunyang Zhang

‡

, Jiawang Tong

‡

mingsong.cw@alibaba-inc.com

†

Zhejiang University and

‡

Alibaba Group

ABSTRACT

The trend in the DBMS market is to migrate to the cloud for elas-

ticity, high availability, and lower costs. The traditional, monolithic

database architecture is dicult to meet these requirements. With

the development of high-speed network and new memory technolo-

gies, disaggregated data center has become a reality: it decouples

various components from monolithic servers into separated re-

source pools (e.g., compute, memory, and storage) and connects

them through a high-speed network. The next generation cloud

native databases should be designed for disaggregated data centers.

In this paper, we describe the novel architecture of PolarDB

Serverless, which follows the disaggregation design paradigm: the

CPU resource on compute nodes is decoupled from remote mem-

ory pool and storage pool. Each resource pool grows or shrinks

independently, providing on-demand provisoning at multiple di-

mensions while improving reliability. We also design our system to

mitigate the inherent penalty brought by resource disaggregation,

and introduce optimizations such as optimistic locking and index

awared prefetching. Compared to the architecture that uses local

resources, PolarDB Serverless achieves better dynamic resource pro-

visioning capabilities and 5.3 times faster failure recovery speed,

while achieving comparable performance.

CCS CONCEPTS

• Information systems → Data management systems

;

• Net-

works → Cloud computing.

KEYWORDS

cloud database; disaggregated data center; shared remote memory;

shared storage

ACM Reference Format:

Wei Cao

†, ‡

, Yingqiang Zhang

‡

, Xinjun Yang

‡

, Feifei Li

‡

, Sheng Wang

‡

,

Qingda Hu

‡

, Xuntao Cheng

‡

, Zongzhi Chen

‡

, Zhenjun Liu

‡

, Jing Fang

‡

, Bo

Wang

‡

, Yuhui Wang

‡

, Haiqing Sun

‡

, Ze Yang

‡

, Zhushi Cheng

‡

, Sen Chen

‡

,

Jian Wu

‡

, Wei Hu

‡

, Jianwei Zhao

‡

, and Yusong Gao

‡

, Songlu Cai

‡

, Yunyang

Zhang

‡

, Jiawang Tong

‡

. 2021. PolarDB Serverless: A Cloud Native Database

Permission to make digital or hard copies of part or all of this work for personal or

classroom use is granted without fee provided that copies are not made or distributed

for prot or commercial advantage and that copies bear this notice and the full citation

on the rst page. Copyrights for third-party components of this work must be honored.

For all other uses, contact the owner/author(s).

SIGMOD ’21, June 20–25, 2021, Virtual Event, China

© 2021 Copyright held by the owner/author(s).

ACM ISBN 978-1-4503-8343-1/21/06.

https://doi.org/10.1145/3448016.3457560

for Disaggregated Data Centers. In Proceedings of the 2021 International

Conference on Management of Data (SIGMOD ’21), June 20–25, 2021, Virtual

Event, China. ACM, New York, NY, USA, 13 pages. https://doi.org/10.1145/

3448016.3457560

1 INTRODUCTION

As enterprises move their applications to the cloud, they are also

migrating their databases to the cloud. The driving force of this

trend is threefold. First, cloud vendors provide the “pay-as-you-go”

model that allows customers to avoid paying for over-provisioned

resources, resulting in signicant cost reduction. Second, marketing

activities such as Black Friday and Singles’ Day often demand

rapid but transient resource expansion from the database systems

during peak time, where cloud vendors can oer such elasticity

to customers. Third, cloud vendors are able to quickly upgrade

and evolve the database systems to maintain competitiveness and

repair defects in time while sustaining high availability. Customers

always expect that node failures, especially planned downtime and

software upgrades, will have less impact on their business.

Cloud vendors such as AWS [

43

], Azure [

2

], GCP and Alibaba [

9

]

provide relational database as a services (DBaaS). There are three

typical architectures for cloud databases: monolithic machine (Fig-

ure 1), virtual machine with remote disk (Figure 2(a)), and shared

storage (Figure 2(b)), and the last two can be referred as separation of

compute and storage. Though these architectures have been widely

used, they all suer from challenges caused by resource coupling.

Under the monolithic machine architecture, all resources (such

as CPU, memory and storage) are tightly coupled. The DBaaS plat-

form needs to solve bin-packing problems when assigning database

instances to machines. It is dicult to make dierent resources

allocated on a physical machine all have a high utilization rate,

which is prone to fragmentation. Moreover, it is dicult to meet

the demands of customers to adjust individual resources exibly

according to the load at runtime. Finally, a system with tightly

coupled resources has the problem of fate sharing, i.e., the failure

of one resource will cause the failure of other resources. Resources

cannot be recovered independently and transparently, which leads

to longer system recovery time.

With the separation of compute and storage architecture, DBaaS

can independently improve the resource utilization of the storage

pool. The shared storage subtype further reduces storage costs —

the primary and read replicas can attach and share the same storage.

Read replicas help to serve high volume read trac and ooad ana-

lytical queries from the primary. However, in all these architectures,

Replication

Monolithic Server

CPU

Memory

Storage

Monolithic Server

CPU

Memory

Storage

Figure 1: monolithic machine

Replication

Compute Node

CPU

Memory

Compute Node

CPU

Memory

Storage

Storage Node

Storage

Storage Node

Network

Storage Pool Storage Pool

(a) virtual machine with remote disk

Coordination

Compute Node

CPU

Memory

Compute Node

CPU

Memory

Storage

Storage Node

Storage Pool

Network

(b) shared storage

Figure 2: separation of compute and storage

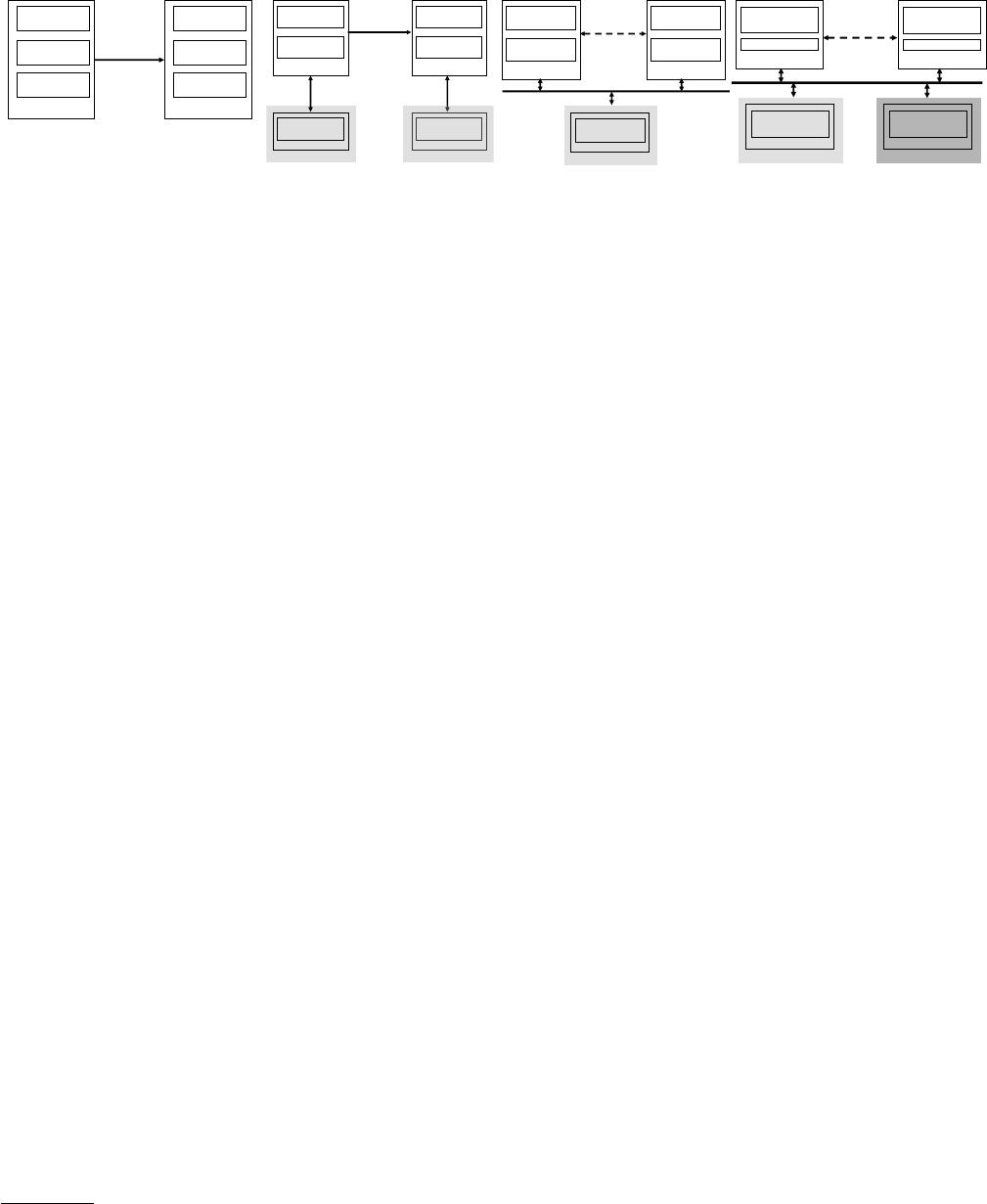

Coordination

Compute Node

Local Memory

CPU

Storage

Storage Node

Storage Pool

Memory

Memory Node

Memory Pool

Compute Node

Local Memory

CPU

Network

Figure 3: disaggregation

problems like bin-packing of CPU and memory, lacking of exible

and scalable memory resources, remain unsolved. Furthermore,

each read replica keeps a redundant in-memory data copy, leading

to high memory costs.

In this paper, we propose a novel cloud database design par-

adigm of the disaggregation architecture (Figure 3). It goes one

step further than the shared storage architecture, to address the

aforementioned problems. The disaggregation architecture runs

in the disaggregated data centers (DDC), in which CPU, memory

and storage resources are no longer tightly coupled as in a mono-

lithic machine. Resources are located in dierent nodes connected

through high-speed network. As a result, each resource type im-

proves its utilization rate and expands its volume independently.

This also eliminates fate sharing, allowing each resource be re-

covered from failure and upgraded independently. Moreover, data

pages in the remote memory pool can be shared among multiple

database processes, analogous to the storage pool being shared

in shared storage architecture. Adding a read replica no longer in-

creases the cost of memory resources, except for consuming a small

piece of local memory.

A trend in recent years is that cloud-native database vendors are

launching serverless variants [

3

,

4

]. The main feature of serverless

databases is on-demand resource provisioning (such as auto-scaling

and auto-pause), which should be transparent and seamless without

interrupting customer workloads. Most cloud-native databases are

implemented based on the shared storage architecture, where CPU

and memory resources are coupled and must be scaled at the same

time. In addition, auto-pause has to release both resources, resulting

in long resumption time. We show that disaggregation architecture

can overcome these limitations.

PolarDB Serverless is a cloud-native database implementation

that follows the disaggregation architecture. Similar to major cloud-

native database products like Aurora, HyperScale, and PolarDB

1

,

it includes one primary (RW node) and multiple read replicas (RO

nodes) in the database node layer. With the disaggregation architec-

ture, it is possible to support multiple primaries (RW nodes), but

this is not within the scope of this paper.

The design of a multi-tenant scale-out memory pool is intro-

duced in PolarDB Serverless, including page allocation and life cycle

management. The rst challenge is to ensure that the system exe-

cutes transactions

correctly

after adding remote memory to the

system. For example, read after write should not miss any updates

even across nodes. We realize it using cache invalidation. When

1

PolarDB Serverless is developed on a fork of PolarDB’s codebase.

RW is splitting or merging a B+Tree index, other RO nodes should

not see an inconsistent B-tree structure in the middle. We protect

it with global page latches. When a RO node performs read-only

transactions, it must avoid reading anything written by uncommit-

ted transactions. We achieve it through the synchronization of read

views between database nodes.

The evolution of the disaggregation architecture could have a

negative impact on the database performance. It is because the

data is likely to be accessed from the remote, which introduces

signicant network latency. The second challenge is to execute

transactions

eciently

. We exploit RDMA optimization exten-

sively, especially one-sided RDMA verbs, including using RDMA

CAS [

42

] to optimize the acquisition of global latches. In order

to improve concurrency, both RW and RO use optimistic locking

techniques to avoid unnecessary global latches. On the storage side,

page materialization ooading allows dirty pages to be evicted

from remote memory without ushing them to the storage, while

index-aware prefetching improve query performance.

The disaggregation architecture complicates the system and

hence increases the variety and probability of system failures. As a

cloud database service, the third challenge is to build a

reliable

sys-

tem, we summarize our strategies to handle single-node crashes of

dierent node types which guarantee that there is no single-point

failure in the system. Because the states in memory and storage are

decoupled from the database node, crash recovery time of the RW

node becomes 5.3 times faster than that in the monolithic machine

architecture.

We summarize our main contributions as follows:

•

We propose the disaggregation architecture and present the

design of PolarDB Serverless, which is the rst cloud database

implementation following the architecture. We demonstrate

that this architecture provides new opportunities for the

design of new cloud-native and serverless databases.

•

We provide design details and optimizations that make the

system work correctly and eciently, overcoming the perfor-

mance drawbacks brought by the disaggregation architecture.

•

We describe our fault tolerance strategies, including the

handling of single-point failures and cluster failures.

The remainder of this paper is organized as follows. In Section 2,

we introduce backgrounds of PolarDB and DDC. Section 3 explains

the design of PolarDB Serverless. Section 4 presents our performance

optimizations. Section 5 discusses our fault tolerance and recov-

ery strategies. Section 6 gives the experimental results. Section 7

reviews the related work, and Section 8 concludes the paper.

of 13

免费下载

【版权声明】本文为墨天轮用户原创内容,转载时必须标注文档的来源(墨天轮),文档链接,文档作者等基本信息,否则作者和墨天轮有权追究责任。如果您发现墨天轮中有涉嫌抄袭或者侵权的内容,欢迎发送邮件至:contact@modb.pro进行举报,并提供相关证据,一经查实,墨天轮将立刻删除相关内容。

下载排行榜

文档被以下合辑收录

评论