RAT-SQL-TC Pay More Attention to History- A Context Modelling Strategy for Conversational Text-to-SQL.pdf

免费下载

Pay More Attention to History: A Context Modelling Strategy for

Conversational Text-to-SQL

Yuntao Li

1

, Hanchu Zhang

2

, Yutian Li

2

, Sirui Wang

2

, Wei Wu

2

, Yan Zhang

1

1

Peking University

2

Meituan

{li.yt, zhyzhy001}@pku.edu.cn, {zhanghanchu, liyutian, wangsirui, wuwei30}@meituan.com

Abstract

Conversational text-to-SQL aims at converting multi-turn natu-

ral language queries into their corresponding SQL (Structured

Query Language) representations. One of the most intractable

problems of conversational text-to-SQL is modelling the se-

mantics of multi-turn queries and gathering the proper infor-

mation required for the current query. This paper shows that

explicitly modelling the semantic changes by adding each turn

and the summarization of the whole context can bring better

performance on converting conversational queries into SQLs.

In particular, we propose two conversational modelling tasks in

both turn grain and conversation grain. These two tasks simply

work as auxiliary training tasks to help with multi-turn conver-

sational semantic parsing. We conducted empirical studies and

achieved new state-of-the-art results on the large-scale open-

domain conversational text-to-SQL dataset. The results demon-

strate that the proposed mechanism significantly improves the

performance of multi-turn semantic parsing.

1

Index Terms: conversational text-to-sql, human-computer in-

teraction, computational paralinguistics

1. Introduction

Semantic parsing is a task that maps natural language queries

into corresponding machine-executable logical forms. Being

one of the most popular branches of semantic parsing, text-

to-SQL, which relieves real users from the burden of learning

about techniques behind the queries, has drawn quantities of

attention in the field of natural language processing. Existing

work mainly focused on converting individual utterances into

SQL (Structured Query Language) queries. However, in real

scenarios, users tend to interact with systems through conversa-

tions to acquire information, in which the conversation context

should be considered. To meet this users’ demand, the attention

of research on single-turn text-to-SQL shifted to conversational

text-to-SQL.

Conversational text-to-SQL is an extension of the standard

text-to-SQL task, which frees the restriction of natural language

queries from single-turn settings into multi-turn settings. Re-

cent studies [1, 2, 3] indicate that conversational text-to-SQL

shows much higher difficulty compared with single-turn text-

to-SQL. This kind of difficulty mainly comes from modelling

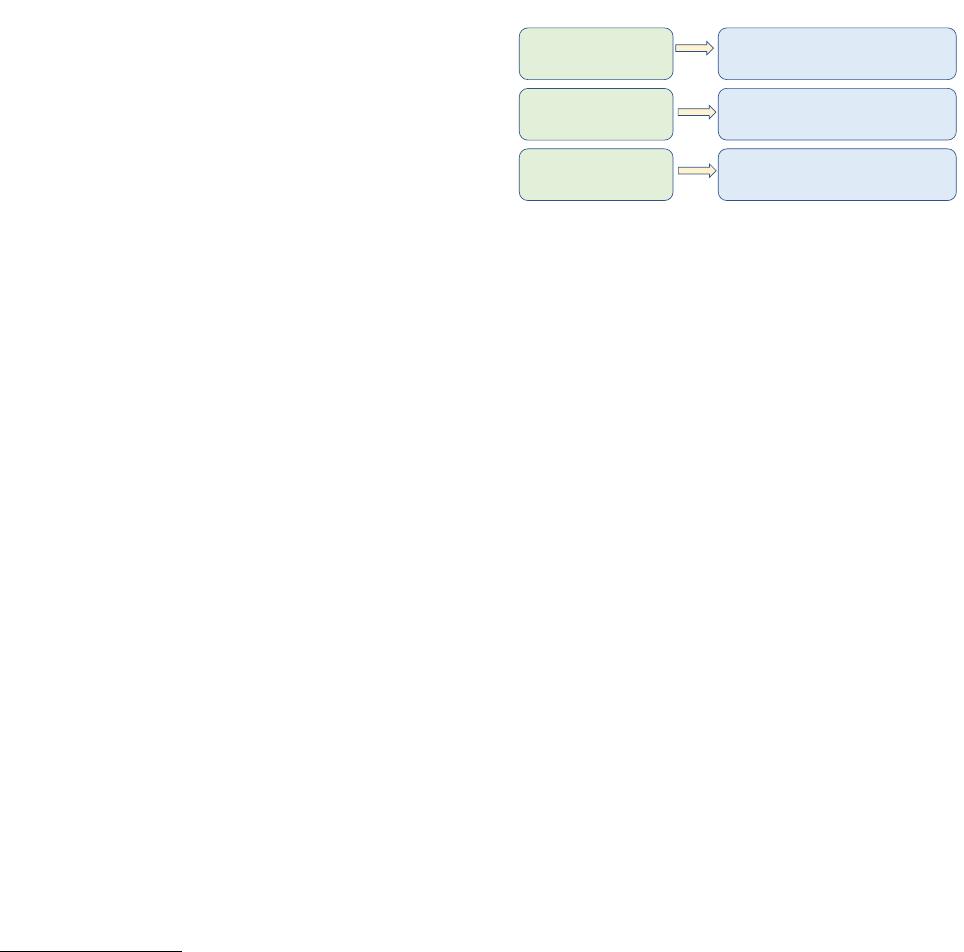

multi-turn natural language queries. Figure 1 shows an exam-

ple of conversational semantic parsing. Three utterances appear

in this conversation. The second query is asked according to the

first query, and the SQL of the second turn is a modification of

the first one by adding an additional restriction. The third query

1

Our code is publicly available at https://github.com/JuruoMP/RAT-

SQL-TC.

changes the selected columns based on the second query, which

results in modification of the selected columns of the SQL.

What countries are in North

America?

SELECT * FROM country WHERE Continent =

"North America"

Of those, which have

surface area greater than

3000?

What is the total population

and average surface area of

those countries?

SELECT * FROM country WHERE Continent =

"North America" AND SurfaceArea > 3000

SELECT sum(Population) , avg(SurfaceArea)

FROM country WHERE Continent = "North

America" AND SurfaceArea > 3000

Conversational Queries SQLs

Figure 1: A conversation with three queries. The semantics of

latter turns depends on previous turns, and the corresponding

SQLs can be regarded as a modification of the previous ones.

It can be observed from the example that to better under-

stand a contextual query and generate a corresponding SQL, it

is essential to model both the semantics changes by adding each

separate turn, as well as mapping those changes into the SQL

operations. On the one hand, modelling the semantic changes

by adding every single turn is conducive to better understanding

the semantic flow during a conversation, and thus helps to better

summarise them into a single SQL. On the other hand, in order

to generate correct predicted SQLs, it is vital to correlate those

semantic changes with database schema operations.

Motivated by these observations, in this paper, we pro-

pose RAT-SQL-TC, which uses two auxiliary tasks to better

modelling multi-turn conversational context and generating cor-

rect SQL representations based on RAT-SQL[4]. The first

task is Turn Switch Prediction (TSP), which predicts how SQL

changes while adding a new turn during a conversation. And

the second task is Contextual Schema Prediction (CSP), which

helps with mapping the contextual changes to database schema

operations. CSP requires the utterance encoder model to predict

the changes of usage of each column w.r.t the current turn of a

conversation. CSP also enhances the encoder model to make

a better understanding of database schemas. These two tasks

work as auxiliary tasks of multi-task learning that are trained

together with the SQL generation task. Our proposed two tasks

work from a natural-language-understanding perspective and a

database-schema-aware perspective respectively, to enhance the

understanding of conversation context and further promote text-

to-SQL generation.

We evaluate our proposed method on a popular large-

scale cross-domain conversational text-to-SQL benchmarks,

i.e., SParC [1]. By adding our mechanisms, the accuracy of both

query match and interaction match is significantly improved

arXiv:2112.08735v2 [cs.CL] 26 Jul 2022

against baseline methods. We also achieve new state-of-the-art

results on the leaderboard at the time of writing this paper.

Our proposed mechanisms show advantages in the follow-

ing aspects. (1) TSP and CSP work from a natural-language-

understanding perspective and a database-schema-aware per-

spective on better modelling conversational context. (2) Our

proposed method works as auxiliary tasks of multi-task learn-

ing, which avoids troublesome synthetic conversational data

collection and extensive computational costs compared with

pre-training methods. (3) We boost baseline methods signifi-

cantly and achieve new state-of-the-art results on a large-scale

cross-domain benchmark.

2. Related Work

2.1. Semantic Parsing and Text-to-SQL

Semantic parsing has been studied for a long period. Previous

semantic parsers are generally based on either expert-designed

rules [5, 6, 7] or statistical techniques [8, 9, 10]. In recent

years, neural semantic parsers come to the fore. Neural se-

mantic parsers generally treat semantic parsing as a sequence-

to-sequence task, and solve it with encoder-decoder frame-

work [11, 12, 13, 14, 15].

Text-to-SQL takes a large share of all semantic parsing

tasks. Previous text-to-SQL task mainly focus on relative-

simple in-domain text-to-SQL scenarios, and state-of-the-art

models show promising performance in this scenario [16, 17,

18]. Recently, a cross-domain multi-table text-to-SQL dataset

called Spider is proposed[19]. Compared with in-domain text-

to-SQL, cross-domain multi-table text-to-SQL requires mod-

els for higher ability of generalization on both natural lan-

guage and database schema understanding. On better solv-

ing this task, besides pure sequence-to-sequence methods, a

new skeleton-then-detail paradigm is proposed and widely ap-

plied. This paradigm generates a SQL skeleton first and then

fill the skeleton with database schema tokens. Models be-

long to this paradigm includes SQLNet [20], TypeSQL [21],

SQLova [22], Coarse2Fine [23], XSQL [24], HydraNet [25],

etc. Besides, some other strategies are proposed for enhancing

text-to-SQL parsers, including intermediate representation en-

hancement [26, 27, 28], reasoning through GNN model [29, 30,

4, 31, 32], and data augmentation [33, 34].

2.2. Conversational Text-to-SQL

Compared with single-turn text-to-SQL, conversational text-to-

SQL requires semantic parsers to understand the context of con-

versations to make correct SQL predictions. More recently, two

large-scale cross-domain benchmarks for conversational text-

to-SQL (i.e., SParC and CoSQL [1, 35]) are constructed, and

several studies are conducted based on these two benchmarks.

EditSQL [3] takes predicted SQL from the previous turn and

natural language utterance of the current turn as input, and ed-

its the previous SQL according to the current turn to gener-

ate the newly predicted SQL. This method tends to fail when

users ask for a new question less related to the conversation

context. IGSQL [36] solves this problem by building graph

among database schema and turns of queries to model the con-

text consistency during a conversation. IST-SQL [37] borrows

the idea from dialogue state tracking and regards columns as

slots with their value being their usage. Those slot-value pairs

are stored to represent the dialogue state. R

2

SQL [38] intro-

duces a dynamic schema-linking graph network and several dy-

namic memory decay mechanisms to track dialogue states and

uses a reranker to filter out some easily-detected incorrect pre-

dicted SQLs. Yu et al. proposed a language model pre-training

method specified for conversational text-to-SQL and achieved

state-of-the-art results on both datasets named score [39]. How-

ever, this method requires quantities of synthesized conversa-

tional semantic parsing data and relative high training cost.

3. Problem Formalization

Conversational text-to-SQL is a task that maps multi-turn nat-

ural language queries u = [u

1

, u

2

, · · · , u

T

] into correspond-

ing SQL logical forms y = [y

1

, y

2

, · · · , y

T

] w.r.t a pre-defined

database schema s, where T is the number of turns of a con-

versation. A database schema s = [s

1

, s

2

, · · · , s

m

] indicates

for all tables and columns from a multi-table database, where

each s

i

represents a hTable, Columni pairs. The goal of neural

semantic parsers is to maximize the probability of predicting

correct SQL y

t

given all natural language turns before t, i.e.,

max

T

Y

t=1

P (y

t

|u

1,··· ,t

; s)

(1)

Different from single-turn semantic parsing, when parsing y

t

,

all utterance turns before the t-th turn, i.e., [u

1

, u

2

, · · · , u

t

],

should be considered.

4. Methodology

In this paper, we propose RAT-SQL-TC for conversational text-

to-SQL, which adds two auxiliary tasks into the widely applied

RAT-SQL. We will introduce the framework of our proposed

model and the proposed two tasks in the following sections.

4.1. Overview of RAT-SQL-TC

RAT-SQL is one of the state-of-the-art neural semantic parsers

in recent years [4]. RAT-SQL is a unified framework which

encodes both relational structure in the database schema and

the given question for SQL generation. We take the RAT-SQL

as the basis to build our model. Concretely, we use a relation-

aware transformer-based encoder model to encode a natural lan-

guage query into vectors, and use a decoder model to translate

the encoded vectors into an abstract syntax tree (AST). This

AST can be further converted into SQL.

Notate u = [u

1

, u

2

, · · · , u

T

] to be a sequential query with

T turns, and u

i

= [u

1

i

, u

2

i

, · · · , u

|u

i

|

i

] where u

j

i

is the j-th token

of the i-th query. Notate s = [s

1

, s

2

, · · · , s

M

] to be the corre-

sponding database schema with column names. We can obtain

the input of the encoder model by jointing each turn and each

column name. To be specified, we concatenate turns of queries

with a special token “hsi” to indicate the boundary of each turn,

and each column name is concatenated with another special to-

ken “h/si”. Then the combination of the query and the database

schema is fed into the encoder, as is shown in Figure 2. This

input sequence is processed by the transformer-based encoder

model similar to RAT-SQL and a set of encoder vectors is gen-

erated with the same length as the input sequence. We follow

the AST decoding paradigm of RAT-SQL and use a decoder to

generate predicted SQL according to those vectors, and the loss

of decoding is defined as

L

dec

=

|Y |

X

i=1

y

i

log P (y

i

|y

<i

, u; s),

(2)

of 5

免费下载

【版权声明】本文为墨天轮用户原创内容,转载时必须标注文档的来源(墨天轮),文档链接,文档作者等基本信息,否则作者和墨天轮有权追究责任。如果您发现墨天轮中有涉嫌抄袭或者侵权的内容,欢迎发送邮件至:contact@modb.pro进行举报,并提供相关证据,一经查实,墨天轮将立刻删除相关内容。

下载排行榜

评论