2022-06-01

AIX 7.1 + Oracle 11.2.0.4 RAC: looking for the cause of an automatic host reboot on node 2


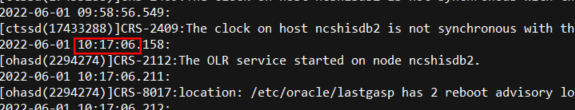

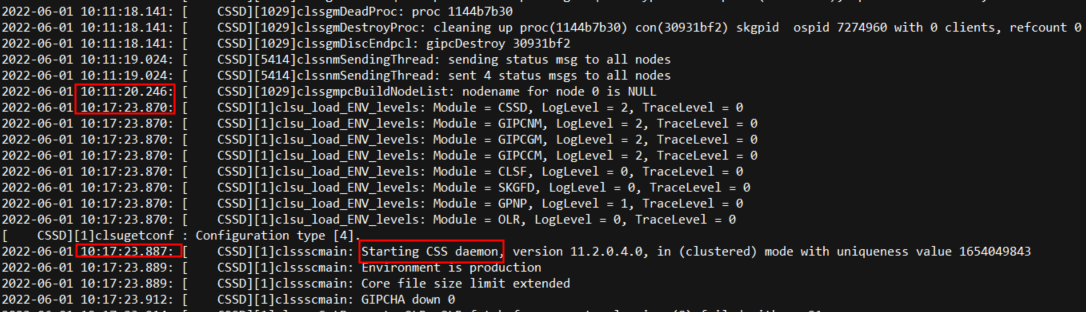

Node 2 (32.161) rebooted at 10:11 this morning. On node 2 I can only see the logs written after the reboot; nothing from before the reboot is visible.

Clue 1:

2022-06-01 10:11:59.193: [cssd(11207262)]CRS-1612:Network communication with node ncshisdb2 (2) missing for 50% of timeout interval. Removal of this node from cluster in 14.848 seconds

Node 1 logged this alert. My understanding is that this heartbeat loss happened while node 2 was already in the middle of rebooting.
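One way to cross-check that reading is to work backwards from the CRS-1612 line. The sketch below assumes a CSS misscount of 30 seconds (a common 11.2 value, but not confirmed for this cluster; `crsctl get css misscount` reports the real one). The function name `estimate_last_heartbeat` is made up for illustration. It estimates when node 1 last heard from node 2 and when the eviction would have fired.

```python
import re
from datetime import datetime, timedelta

# The alert line quoted above, copied from node 1.
LINE = (
    "2022-06-01 10:11:59.193: [cssd(11207262)]CRS-1612:Network communication "
    "with node ncshisdb2 (2) missing for 50% of timeout interval. "
    "Removal of this node from cluster in 14.848 seconds"
)

PATTERN = re.compile(
    r"^(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d{3}): .*?"
    r"node (?P<node>\S+) \((?P<num>\d+)\) missing for (?P<pct>\d+)% "
    r"of timeout interval\.\s+"
    r"Removal of this node from cluster in (?P<remaining>[\d.]+) seconds"
)


def estimate_last_heartbeat(line, misscount_seconds=30.0):
    """Return (node, estimated last heartbeat, eviction deadline).

    misscount_seconds is an ASSUMPTION (30 s); pass the value reported
    by the cluster if it differs.
    """
    match = PATTERN.search(line)
    if match is None:
        raise ValueError("line does not look like a CRS-1612 message")
    logged_at = datetime.strptime(match.group("ts"), "%Y-%m-%d %H:%M:%S.%f")
    remaining = float(match.group("remaining"))
    # Time already elapsed without a heartbeat when the alert was written.
    silent_for = misscount_seconds - remaining
    last_heartbeat = logged_at - timedelta(seconds=silent_for)
    eviction_deadline = logged_at + timedelta(seconds=remaining)
    return match.group("node"), last_heartbeat, eviction_deadline


if __name__ == "__main__":
    node, last_seen, deadline = estimate_last_heartbeat(LINE)
    print(node, last_seen.time(), deadline.time())
```

Under the 30-second assumption, the last heartbeat from ncshisdb2 falls at about 10:11:44 and the eviction deadline at about 10:12:14. If the operating system's own records on node 2 (for example the AIX error log) show the shutdown starting before roughly 10:11:44, that would support the idea that the heartbeat loss was a consequence of the reboot rather than its cause.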

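Clue 2:

Starting with 11.2.0.2, when any of the following situations occurs, Grid Infrastructure (GI) restarts the clusterware stack instead of rebooting the node.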

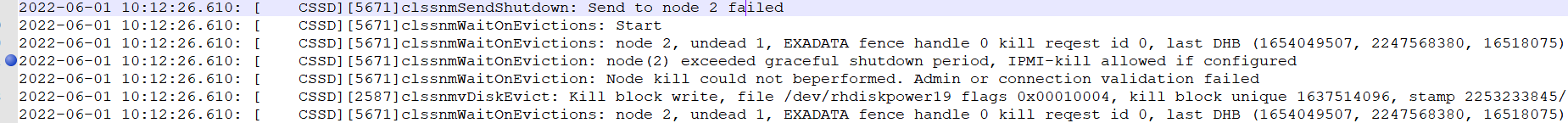

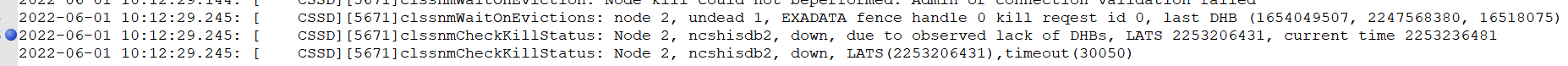

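1. A node misses network heartbeats continuously for longer than misscount.

2. A node cannot access the majority of the voting files (VF).

3. A member kill is escalated to a node kill.

In earlier versions, the clusterware (CRS) rebooted the node directly in these situations.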
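The rule as quoted above can be written down as a small sketch. It models only what this post states (the version cutoff and the three triggers); it is not Oracle's implementation and does not cover any other reboot paths that may exist. The names `Trigger`, `REBOOTLESS_SINCE`, and `expected_action` are hypothetical.

```python
from enum import Enum


class Trigger(Enum):
    """The three situations listed in the post."""
    NETWORK_HEARTBEAT_LOST = "network heartbeats missed for longer than misscount"
    VOTING_FILES_UNREACHABLE = "majority of voting files not accessible"
    MEMBER_KILL_ESCALATED = "member kill escalated to node kill"


# Version cutoff as stated in the post.
REBOOTLESS_SINCE = (11, 2, 0, 2)


def parse_version(text):
    """Turn '11.2.0.4' into (11, 2, 0, 4) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def expected_action(gi_version, trigger):
    """Return the action the quoted rule predicts for one of the three triggers."""
    if not isinstance(trigger, Trigger):
        raise TypeError("trigger must be a Trigger member")
    if parse_version(gi_version) >= REBOOTLESS_SINCE:
        return "restart clusterware stack"
    return "reboot node"


if __name__ == "__main__":
    for trigger in Trigger:
        print(trigger.name, "->", expected_action("11.2.0.4", trigger))
```

For 11.2.0.4 every trigger maps to a stack restart under this rule, which is why a full host reboot on node 2 looks inconsistent with it.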

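So why was my node 2 rebooted at the machine level, instead of only having its clusterware stack restarted? Could someone with experience explain this?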
