1. Overview

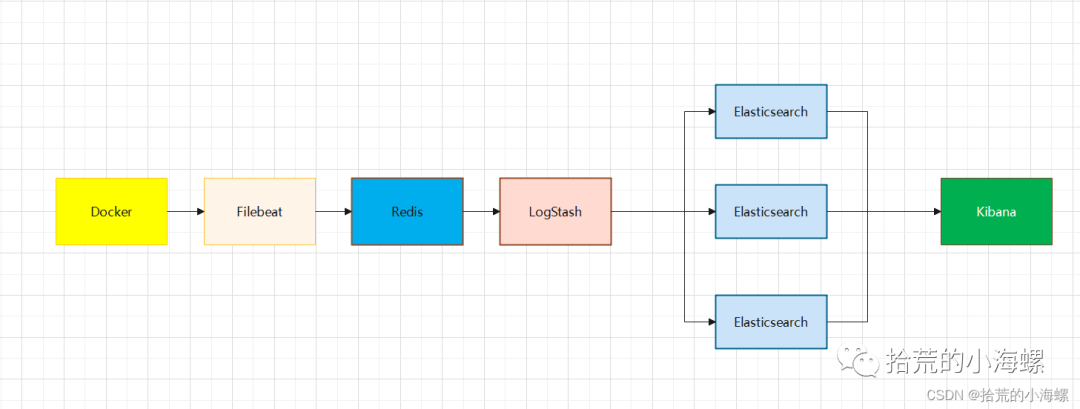

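ELK is a log collection stack. As the name suggests, it combines three open-source tools: Elasticsearch, Logstash, and Kibana. Elasticsearch is a search and data analytics engine. Logstash is a free and open server-side data processing pipeline that ingests data from multiple sources. Kibana is a free and open user interface for visualizing Elasticsearch data.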

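ELK can be deployed in several architectures, chosen according to data volume, server requirements, and similar constraints. Some architectures also add a message queue such as Kafka or Redis, which buffers and persists incoming data so that log entries are not lost.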

2. Elasticsearch cluster

2.1 Pull the image

Install Elasticsearch. This walkthrough uses version 7.2.0:

docker pull elasticsearch:7.2.0

2.2 Create the cluster directories

Create the data directories for the es1, es2, and es3 nodes:

    [root@localhost ~]# mkdir -p /data/elk/es1/data
    [root@localhost ~]# chmod -R 777 /data/elk/es1/data
    [root@localhost ~]# mkdir -p /data/elk/es2/data
    [root@localhost ~]# chmod -R 777 /data/elk/es2/data
    [root@localhost ~]# mkdir -p /data/elk/es3/data
    [root@localhost ~]# chmod -R 777 /data/elk/es3/data
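The six commands above follow one pattern, so they can be collapsed into a loop. The following is a minimal sketch; `make_es_dirs` is a hypothetical helper name, and the base directory is passed in as an argument rather than hard-coded.

```shell
#!/bin/sh
# Create <base>/es1/data ... <base>/esN/data in one pass.
# make_es_dirs is a hypothetical helper, not part of Docker or Elasticsearch.
make_es_dirs() {
    base=$1
    count=${2:-3}          # number of nodes, defaults to 3
    i=1
    while [ "$i" -le "$count" ]; do
        mkdir -p "$base/es$i/data" || return 1
        # World-writable, as in the walkthrough, so the container user can write here.
        chmod -R 777 "$base/es$i/data" || return 1
        i=$((i + 1))
    done
}
```

Calling `make_es_dirs /data/elk` would create the same three directories as the commands above.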

2.3 Configuration

First, start a temporary single-node Elasticsearch container:

docker run -d --name es --rm -e "discovery.type=single-node" elasticsearch:7.2.0

Copy its configuration directory into the es1 directory:

docker cp es:/usr/share/elasticsearch/config /data/elk/es1/config

Stop the temporary container:

docker stop es

Copy the same configuration to es2 and es3:

    cp -a /data/elk/es1/config /data/elk/es2
    cp -a /data/elk/es1/config /data/elk/es3

Configure elasticsearch.yml for es1:

    cluster.name: elasticsearch-cluster
    node.name: es1
    network.bind_host: 0.0.0.0
    network.publish_host: 192.168.254.129
    http.port: 9200
    transport.tcp.port: 9300
    http.cors.enabled: true
    http.cors.allow-origin: "*"
    node.master: true
    node.data: true
    # The two discovery.zen.* names below are pre-7.0 settings, deprecated in 7.x.
    discovery.zen.ping.unicast.hosts: ["192.168.254.129:9300","192.168.254.129:9301","192.168.254.129:9302"]
    discovery.zen.minimum_master_nodes: 2
    cluster.initial_master_nodes: ["es1"]
    xpack.security.enabled: false
    xpack.security.authc.accept_default_password: false
    xpack.security.transport.ssl.enabled: false
    #xpack.security.transport.ssl.verification_mode: certificate
    #xpack.security.transport.ssl.keystore.path: /usr/share/elasticsearch/config/elastic-certificates.p12
    #xpack.security.transport.ssl.truststore.path: /usr/share/elasticsearch/config/elastic-certificates.p12
    http.cors.allow-headers: Authorization,X-Requested-With,Content-Length,Content-Type

Configure elasticsearch.yml for es2:

    cluster.name: elasticsearch-cluster
    node.name: es2
    network.bind_host: 0.0.0.0
    network.publish_host: 192.168.254.129
    http.port: 9201
    transport.tcp.port: 9301
    http.cors.enabled: true
    http.cors.allow-origin: "*"
    node.master: true
    node.data: true
    discovery.zen.ping.unicast.hosts: ["192.168.254.129:9300","192.168.254.129:9301","192.168.254.129:9302"]
    discovery.zen.minimum_master_nodes: 2
    cluster.initial_master_nodes: ["es1"]
    xpack.security.enabled: false
    xpack.security.authc.accept_default_password: false
    xpack.security.transport.ssl.enabled: false
    #xpack.security.transport.ssl.verification_mode: certificate
    #xpack.security.transport.ssl.keystore.path: /usr/share/elasticsearch/config/elastic-certificates.p12
    #xpack.security.transport.ssl.truststore.path: /usr/share/elasticsearch/config/elastic-certificates.p12
    http.cors.allow-headers: Authorization,X-Requested-With,Content-Length,Content-Type

Configure elasticsearch.yml for es3:

    cluster.name: elasticsearch-cluster
    node.name: es3
    network.bind_host: 0.0.0.0
    network.publish_host: 192.168.254.129
    http.port: 9202
    transport.tcp.port: 9302
    http.cors.enabled: true
    http.cors.allow-origin: "*"
    node.master: true
    node.data: true
    discovery.zen.ping.unicast.hosts: ["192.168.254.129:9300","192.168.254.129:9301","192.168.254.129:9302"]
    discovery.zen.minimum_master_nodes: 2
    cluster.initial_master_nodes: ["es1"]
    xpack.security.enabled: false
    xpack.security.authc.accept_default_password: false
    xpack.security.transport.ssl.enabled: false
    #xpack.security.transport.ssl.verification_mode: certificate
    #xpack.security.transport.ssl.keystore.path: /usr/share/elasticsearch/config/elastic-certificates.p12
    #xpack.security.transport.ssl.truststore.path: /usr/share/elasticsearch/config/elastic-certificates.p12
    http.cors.allow-headers: Authorization,X-Requested-With,Content-Length,Content-Type
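The three files differ only in `node.name`, `http.port`, and `transport.tcp.port`. One way to keep them in sync is to generate them from a single template. The sketch below assumes a hypothetical helper named `render_es_config` that takes the node number and the publish host, and it leaves out the commented-out SSL lines.

```shell
#!/bin/sh
# Print an elasticsearch.yml for node number $1 (1, 2 or 3) published on host $2.
# render_es_config is a hypothetical helper; the settings are copied from the
# files above, and only the node name and the two ports are computed.
render_es_config() {
    n=$1
    host=$2
    http_port=$((9200 + n - 1))
    transport_port=$((9300 + n - 1))
    cat <<EOF
cluster.name: elasticsearch-cluster
node.name: es$n
network.bind_host: 0.0.0.0
network.publish_host: $host
http.port: $http_port
transport.tcp.port: $transport_port
http.cors.enabled: true
http.cors.allow-origin: "*"
node.master: true
node.data: true
discovery.zen.ping.unicast.hosts: ["$host:9300","$host:9301","$host:9302"]
discovery.zen.minimum_master_nodes: 2
cluster.initial_master_nodes: ["es1"]
xpack.security.enabled: false
xpack.security.authc.accept_default_password: false
xpack.security.transport.ssl.enabled: false
http.cors.allow-headers: Authorization,X-Requested-With,Content-Length,Content-Type
EOF
}
```

For example, `render_es_config 2 192.168.254.129 > /data/elk/es2/config/elasticsearch.yml` would write the es2 file.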

2.4 Start Elasticsearch

Start the es1 container:

    docker run -d --name es1 -p 9200:9200 -p 9300:9300 \
      -e ES_JAVA_OPTS="-Xms256m -Xmx256m" \
      -v /data/elk/es1/config/:/usr/share/elasticsearch/config \
      -v /data/elk/es1/data/:/usr/share/elasticsearch/data \
      elasticsearch:7.2.0

Start the es2 container:

    docker run -d --name es2 -p 9201:9201 -p 9301:9301 \
      -e ES_JAVA_OPTS="-Xms256m -Xmx256m" \
      -v /data/elk/es2/config/:/usr/share/elasticsearch/config \
      -v /data/elk/es2/data/:/usr/share/elasticsearch/data \
      elasticsearch:7.2.0

Start the es3 container:

    docker run -d --name es3 -p 9202:9202 -p 9302:9302 \
      -e ES_JAVA_OPTS="-Xms256m -Xmx256m" \
      -v /data/elk/es3/config/:/usr/share/elasticsearch/config \
      -v /data/elk/es3/data/:/usr/share/elasticsearch/data \
      elasticsearch:7.2.0
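Each container publishes exactly the HTTP and transport ports set in its own elasticsearch.yml, so the published ports move in step with the node number. The sketch below only prints the command for a given node so it can be reviewed before running; `es_run_cmd` is a hypothetical helper name.

```shell
#!/bin/sh
# Print (do not run) the docker command for node number $1.
# es_run_cmd is a hypothetical helper; ports follow the 9200+n-1 / 9300+n-1
# scheme used by the three elasticsearch.yml files.
es_run_cmd() {
    n=$1
    http_port=$((9200 + n - 1))
    transport_port=$((9300 + n - 1))
    echo "docker run -d --name es$n -p $http_port:$http_port -p $transport_port:$transport_port" \
         "-e ES_JAVA_OPTS=\"-Xms256m -Xmx256m\"" \
         "-v /data/elk/es$n/config/:/usr/share/elasticsearch/config" \
         "-v /data/elk/es$n/data/:/usr/share/elasticsearch/data" \
         "elasticsearch:7.2.0"
}
```

Running `es_run_cmd 2` prints the es2 command on one line. Piping the output to `sh` would execute it.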

2.5 Verification

Check the startup logs:

docker logs -f --tail=300 es1

The logs show the following error:

max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]

Permanent fix:

As root, edit /etc/sysctl.conf and add the setting:

    [root@localhost ~]# vi /etc/sysctl.conf
    vm.max_map_count=262144

Then apply it:

sysctl -p
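To confirm the new value before restarting the containers, the current setting can be compared against the minimum from the error message. This is a small sketch; `map_count_ok` is a hypothetical helper name, and the value is passed as an argument so the check itself does not depend on the host.

```shell
#!/bin/sh
# Succeed when the given vm.max_map_count value meets the minimum that
# Elasticsearch asked for in the error message (262144).
# map_count_ok is a hypothetical helper name.
map_count_ok() {
    required=262144
    [ "$1" -ge "$required" ]
}
```

On the host it could be used as `map_count_ok "$(sysctl -n vm.max_map_count)" || echo "vm.max_map_count is still too low"`.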

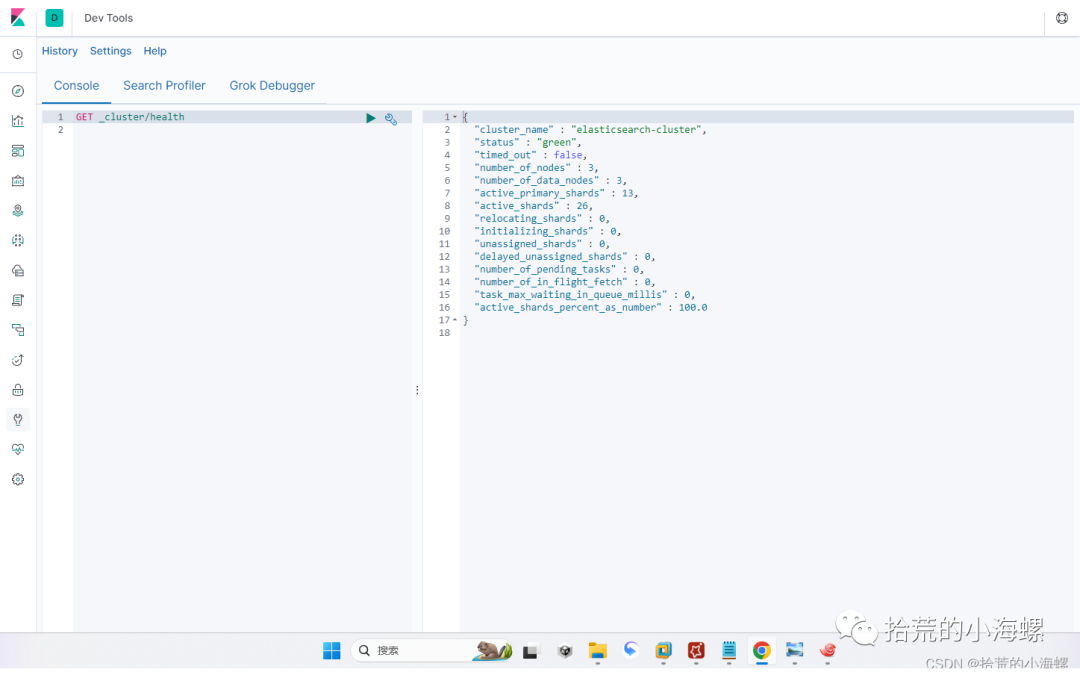

Check the cluster state through _cluster/health:

    [root@localhost ~]# curl 192.168.254.129:9200/_cluster/health
    {"cluster_name":"elasticsearch-cluster","status":"green","timed_out":false,"number_of_nodes":3,"number_of_data_nodes":3,"active_primary_shards":13,"active_shards":26,"relocating_shards":0,"initializing_shards":0,"unassigned_shards":0,"delayed_unassigned_shards":0,"number_of_pending_tasks":0,"number_of_in_flight_fetch":0,"task_max_waiting_in_queue_millis":0,"active_shards_percent_as_number":100.0}

A "status" of "green" means the cluster is healthy: all three nodes have joined and every shard is allocated.
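For scripted checks it helps to pull just the status field out of the response. The sketch below assumes the compact, single-line JSON shown above (the default when `pretty` is not requested) and uses only `sed`; `health_status` is a hypothetical helper name.

```shell
#!/bin/sh
# Read a compact _cluster/health JSON document on stdin and print the
# value of its "status" field (green, yellow or red).
# health_status is a hypothetical helper name.
health_status() {
    sed -n 's/.*"status":"\([^"]*\)".*/\1/p'
}
```

Used as `curl -s 192.168.254.129:9200/_cluster/health | health_status`. A yellow status means all primary shards are allocated but some replicas are not, and red means at least one primary shard is unassigned.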

Start the containers automatically on boot:

    [root@localhost ~]# docker update es1 --restart=always
    es1
    [root@localhost ~]# docker update es2 --restart=always
    es2
    [root@localhost ~]# docker update es3 --restart=always
    es3

3. Installing Kibana

Create the Kibana directory:

mkdir -p /data/elk/kibana/config

Add the kibana.yml configuration:

[root@localhost ~]# vim /data/elk/kibana/config/kibana.yml

    server.name: kibana
    # Listen on all interfaces inside the container, on port 5601.
    server.host: "0.0.0.0"
    server.port: 5601
    elasticsearch.hosts: [ "http://192.168.254.129:9200","http://192.168.254.129:9201","http://192.168.254.129:9202" ]
    xpack.monitoring.ui.container.elasticsearch.enabled: true

Start Kibana:

    docker run --name kibana -p 5601:5601 \
      -v /data/elk/kibana/config/kibana.yml:/usr/share/kibana/config/kibana.yml \
      -d kibana:7.2.0
    docker update kibana --restart=always

Open http://192.168.254.129:5601 in a browser and select Dev Tools.

4. Installing Redis

Pull the Redis image:

docker pull redis:5.0

Create the configuration directory:

mkdir -p /data/elk/redis/data

Add the redis.conf configuration:

vim /data/elk/redis/data/redis.conf

    bind 0.0.0.0
    daemonize no
    pidfile "/var/run/redis.pid"
    port 6370
    timeout 3000
    loglevel warning
    logfile "redis.log"
    dir "/data"
    databases 16
    rdbcompression yes
    dbfilename "redis.rdb"
    requirepass "k8s1989?"
    masterauth "k8s1989?"
    maxclients 10000
    maxmemory 1000mb
    maxmemory-policy allkeys-lru
    appendonly yes
    appendfsync always

Start Redis:

    docker run -d --name redis -p 6370:6370 -v /data/elk/redis/data/:/data redis:5.0 redis-server redis.conf
    docker update redis --restart=always
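Because redis.conf moves Redis off its default port, the same number (6370) has to appear in the `-p` mapping, in the Logstash input, and in the Filebeat output. A quick way to read the port back from the config file is sketched below; `conf_port` is a hypothetical helper name, and it assumes the `port` directive sits on its own line as in the file above.

```shell
#!/bin/sh
# Print the value of the first "port" directive in a redis.conf-style file.
# conf_port is a hypothetical helper name.
conf_port() {
    awk '$1 == "port" { print $2; exit }' "$1"
}
```

For example, `conf_port /data/elk/redis/data/redis.conf` should print the port to use in `-p <port>:<port>`.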

5. Installing Logstash

Pull the logstash:7.2.0 image:

docker pull logstash:7.2.0

Create the directory:

    mkdir /data/elk/logstash
    cd /data/elk/logstash

Start a temporary Logstash container to copy the default configuration from:

docker run --rm --name logstash -d logstash:7.2.0

Copy the configuration into the current directory:

    docker cp logstash:/usr/share/logstash/config .
    docker cp logstash:/usr/share/logstash/pipeline .

Stop the temporary container:

docker stop logstash

Edit the copied configuration.

Point Logstash at the Elasticsearch cluster:

========logstash.yml :

vim /data/elk/logstash/config/logstash.yml

    http.host: "0.0.0.0"
    xpack.monitoring.enabled: true
    xpack.monitoring.elasticsearch.hosts: ["http://192.168.254.129:9200","http://192.168.254.129:9201","http://192.168.254.129:9202"]

Register the pipeline that collects the Docker logs:

========pipelines.yml:

vim /data/elk/logstash/config/pipelines.yml

    - pipeline.id: docker
      path.config: "/usr/share/logstash/pipeline/docker.conf"

Add the docker.conf pipeline configuration:

========docker.conf:

    mv /data/elk/logstash/pipeline/logstash.conf /data/elk/logstash/pipeline/docker.conf
    vim /data/elk/logstash/pipeline/docker.conf

    input {
      redis {
        host => "192.168.254.129"
        port => 6370
        db => 0
        key => "localhost"
        password => "k8s1989?"
        data_type => "list"
        threads => 4
        tags => "localhost"
      }
    }

    output {
      if "localhost" in [tags] {
        if [fields][function] == "docker" {
          elasticsearch {
            hosts => ["http://192.168.254.129:9200","http://192.168.254.129:9201","http://192.168.254.129:9202"]
            index => "docker-localhost-%{+YYYY.MM.dd}"
          }
        }
      }
    }
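The `index` setting uses a date pattern, so Logstash writes each event to a daily index named after the event's `@timestamp`, which is in UTC. The sketch below reproduces that mapping in shell to show which index a given event lands in; `index_for_timestamp` is a hypothetical helper name and the timestamp in the usage line is only an example.

```shell
#!/bin/sh
# Map an ISO-8601 UTC timestamp (e.g. 2019-07-04T23:59:59Z) to the daily
# index name produced by "docker-localhost-%{+YYYY.MM.dd}".
# index_for_timestamp is a hypothetical helper name.
index_for_timestamp() {
    day=${1%%T*}    # keep only the date part, e.g. 2019-07-04
    printf 'docker-localhost-%s\n' "$(printf '%s' "$day" | tr '-' '.')"
}
```

For instance, `index_for_timestamp 2019-07-04T23:59:59Z` prints `docker-localhost-2019.07.04`.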

Start Logstash:

    docker run -d -p 5044:5044 -p 9600:9600 --name logstash \
      -v /data/elk/logstash/config/:/usr/share/logstash/config \
      -v /data/elk/logstash/pipeline/:/usr/share/logstash/pipeline \
      logstash:7.2.0
    docker update logstash --restart=always

6. Installing Filebeat

Create the directory:

mkdir /data/elk/filebeat

Add the filebeat.yml configuration:

vim /data/elk/filebeat/filebeat.yml

    filebeat.config.modules:
      path: ${path.config}/modules.d/*.yml
      reload.enabled: false

    setup.template.settings:
      index.number_of_shards: 1

    filebeat.inputs:
    - type: docker
      enabled: true
      combine_partial: true
      containers:
        path: "/var/lib/docker/containers"
        ids:
        - '*'
      processors:
      - add_docker_metadata: ~
      encoding: utf-8
      max_bytes: 104857600
      tail_files: true
      fields:
        function: docker

    processors:
    - add_host_metadata: ~
    - add_cloud_metadata: ~

    output.redis:
      hosts: ["192.168.254.129:6370"]
      password: "k8s1989?"
      db: 0
      key: "localhost"
      keys:
      - key: "%{[fields.list]}"
        mappings:
          function: "docker"
      worker: 4
      timeout: 20
      max_retries: 3
      codec.json:
        pretty: false

    monitoring.enabled: true
    monitoring.elasticsearch:
      hosts: ["http://192.168.254.129:9200","http://192.168.254.129:9201","http://192.168.254.129:9202"]

Make root the owner of the configuration file:

sudo chown root:root /data/elk/filebeat/filebeat.yml

Pull and start Filebeat:

    docker run -d --name filebeat --hostname localhost --user=root \
      -v /data/elk/filebeat/filebeat.yml:/usr/share/filebeat/filebeat.yml:ro \
      -v /var/lib/docker:/var/lib/docker:ro \
      -v /var/run/docker.sock:/var/run/docker.sock:ro \
      docker.elastic.co/beats/filebeat:7.2.0
    docker update filebeat --restart=always

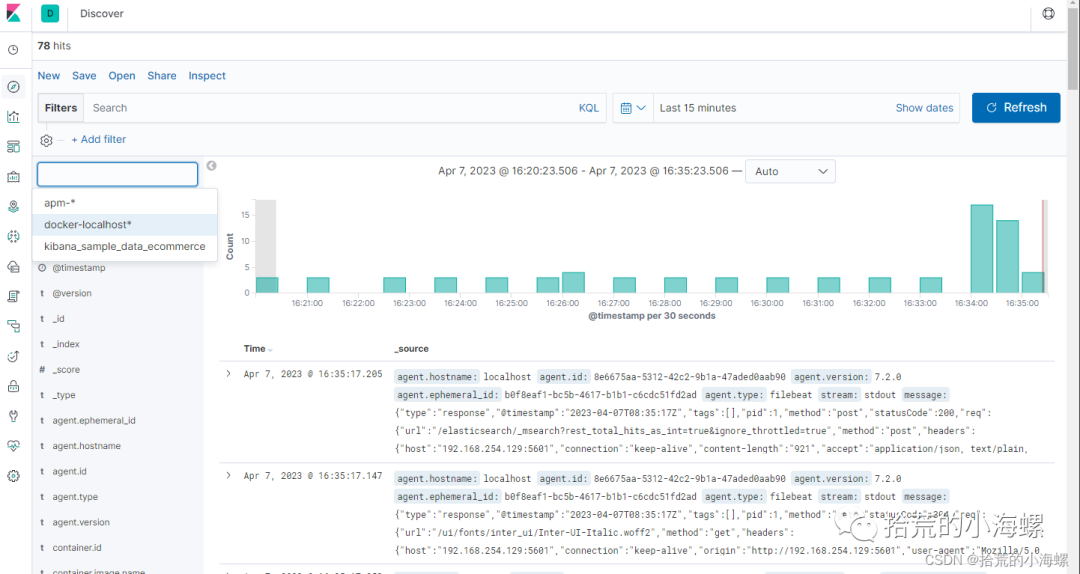

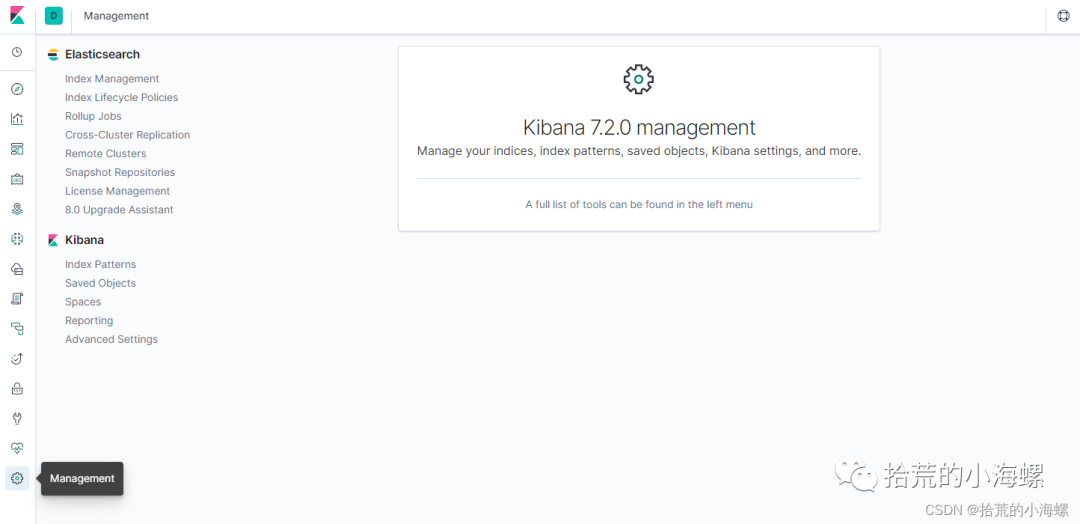

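7. Creating an index pattern in Kibana

1. Configure the index pattern

Click Management, then Index Patterns under Kibana, then Create index pattern.

Once the pattern is created, use the filter in the Discover panel to narrow the results for that index pattern.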
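An index pattern is a wildcard over index names. The text does not say which pattern to enter. Assuming `docker-localhost-*`, derived from the `index` setting in docker.conf, the sketch below uses shell globbing to show which index names such a pattern would cover. `matches_index_pattern` is a hypothetical helper name, and shell globbing only approximates Kibana's matching for a simple trailing `*`.

```shell
#!/bin/sh
# Succeed when index name $1 matches wildcard pattern $2.
# matches_index_pattern is a hypothetical helper; the pattern is left
# unquoted inside case so that * acts as a wildcard.
matches_index_pattern() {
    case $1 in
        $2) return 0 ;;
        *)  return 1 ;;
    esac
}
```

With this, `matches_index_pattern docker-localhost-2019.07.04 'docker-localhost-*'` succeeds, while an unrelated name such as `filebeat-7.2.0` does not match.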